Kubernetes Overview

With the widespread adoption of containers among organizations, Kubernetes, the container-centric management software, has become a standard for deploying and operating containerized applications and is now a core part of many DevOps toolchains.

Kubernetes was originally developed at Google and released as open source in 2014. It builds on 15 years of running Google's containerized workloads and on valuable contributions from the open-source community.

What is Kubernetes?

Kubernetes (K8s) is an open-source container orchestration tool that helps you orchestrate and manage your container infrastructure on-premises or in the cloud.

The purpose of K8s is to host an application in the form of containers in an automated fashion, so that we can easily deploy as many instances of our application as required and enable communication between different services within the application.

You may be wondering why it is called K8s. There are eight letters between the "K" and the "s" in Kubernetes, so K8s is simply a shorthand for the full name.
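The counting rule can be checked with a few lines of Python. This is only an illustrative sketch; the helper name `numeronym` is made up for this example.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as first letter + number of middle letters + last letter."""
    if len(word) <= 3:
        return word  # too short to be worth abbreviating
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("Kubernetes"))  # K8s
```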

What are the benefits of using K8s?

Some of the main benefits of using Kubernetes include:

Scalability: Kubernetes makes it easy to scale applications up or down by adding or removing containers as needed. This allows applications to automatically adjust to changes in demand and ensures that they can handle large volumes of traffic.

High availability: Kubernetes keeps applications highly available by scheduling containers across multiple nodes in a cluster and automatically replacing failed containers. This helps prevent downtime and keeps applications available to users.

Improved resource utilization: Kubernetes automatically schedules containers based on the available resources, which helps to improve resource utilization and reduce waste. This can help reduce costs and improve the efficiency of your applications.

Easy deployment and updates: Kubernetes makes it easy to deploy and update applications through a declarative configuration approach. Developers specify the desired state of their applications, and Kubernetes works to make the actual state match it.

Portable across cloud providers: Kubernetes runs on different cloud providers, which means that you can use the same tools and processes to manage your applications regardless of where they are deployed. This can make it easier to move applications between cloud providers.

Auto-Healing: K8s automatically detects and replaces failed containers, improving application reliability.

Service Discovery and Load Balancing: It handles routing traffic to the right containers and balances the load, ensuring even distribution.

Security: Kubernetes provides tools for managing secrets, controlling access, and enforcing network policies to enhance application security.

Declarative Configuration: You define your application's desired state, and K8s ensures that state is maintained, reducing manual configuration.
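To make the idea of a declared desired state more concrete, here is a minimal sketch that builds a Deployment manifest as a Python dictionary and prints it as JSON. The application name `web`, the image `nginx:1.25`, and the helper `deployment_manifest` are assumptions chosen for illustration; only the manifest fields themselves (`apiVersion`, `kind`, `spec.replicas`, and so on) come from the Kubernetes Deployment format.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Build a minimal Deployment manifest describing the desired state."""
    labels = {"app": name}  # hypothetical label used to tie pods to the Deployment
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # how many pod copies we want running
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.25", replicas=3)
print(json.dumps(manifest, indent=2))
```

Nothing here says how to start the containers. The manifest only states what should exist, and if the printed JSON were saved to a file, it could be handed to the cluster with `kubectl apply -f`.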

Monolithic vs Microservice Architecture

Monolithic architecture is a classical approach we can use while building software applications. It was the dominant approach to software development for many years and is still widely used today.

The monolithic architecture couples and runs all the application’s components as a single, unified system. Therefore, any modifications made to one part of the application can potentially impact the entire system.

A microservices architecture presents a different methodology for developing software applications compared to classical approaches. It addresses the limitations of monolithic architecture.

In the microservices architecture, the application is broken down into small, independent services that communicate with each other using well-defined APIs.

Furthermore, each microservice is responsible for a specific feature or functionality of the application, and each service can be developed, deployed, and scaled independently of the other services. This allows for greater flexibility and agility in developing and maintaining the application.

Kubernetes Architecture

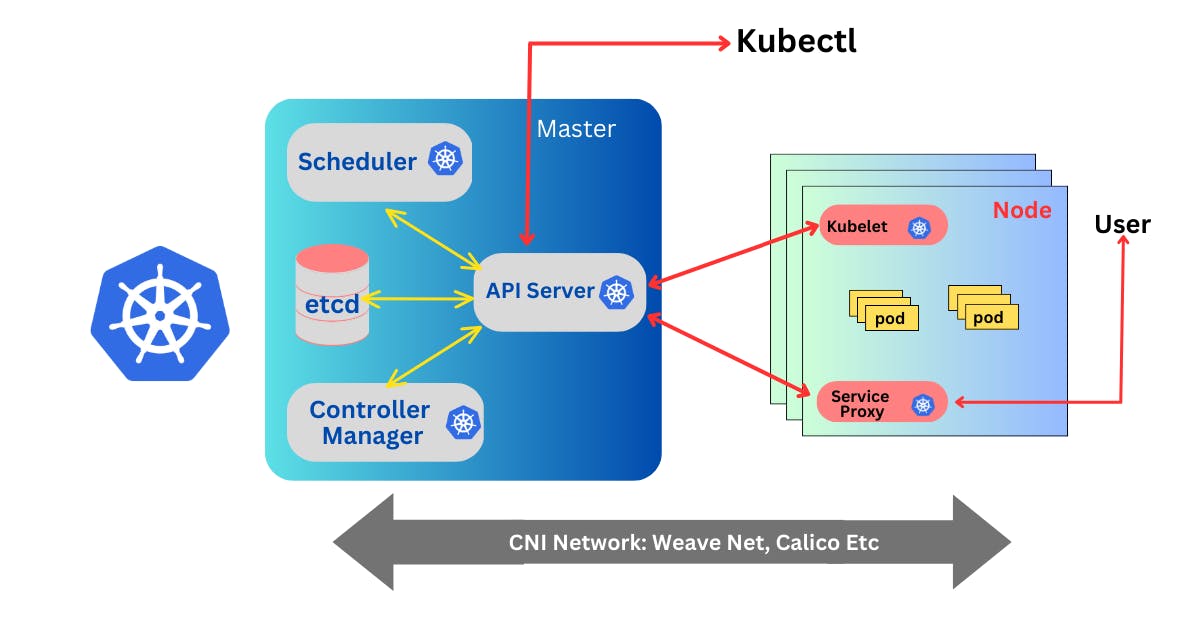

Kubernetes follows a client-server architecture.

A cluster is a group of servers. A typical cluster has at least two servers; the diagram above shows one master server and one node server.

A Kubernetes cluster is a form of Kubernetes deployment architecture.

Basic Kubernetes architecture consists of two parts:

The control plane/master node and

The nodes/worker nodes.

All workloads run on the worker nodes, and the worker nodes are managed by the master node.

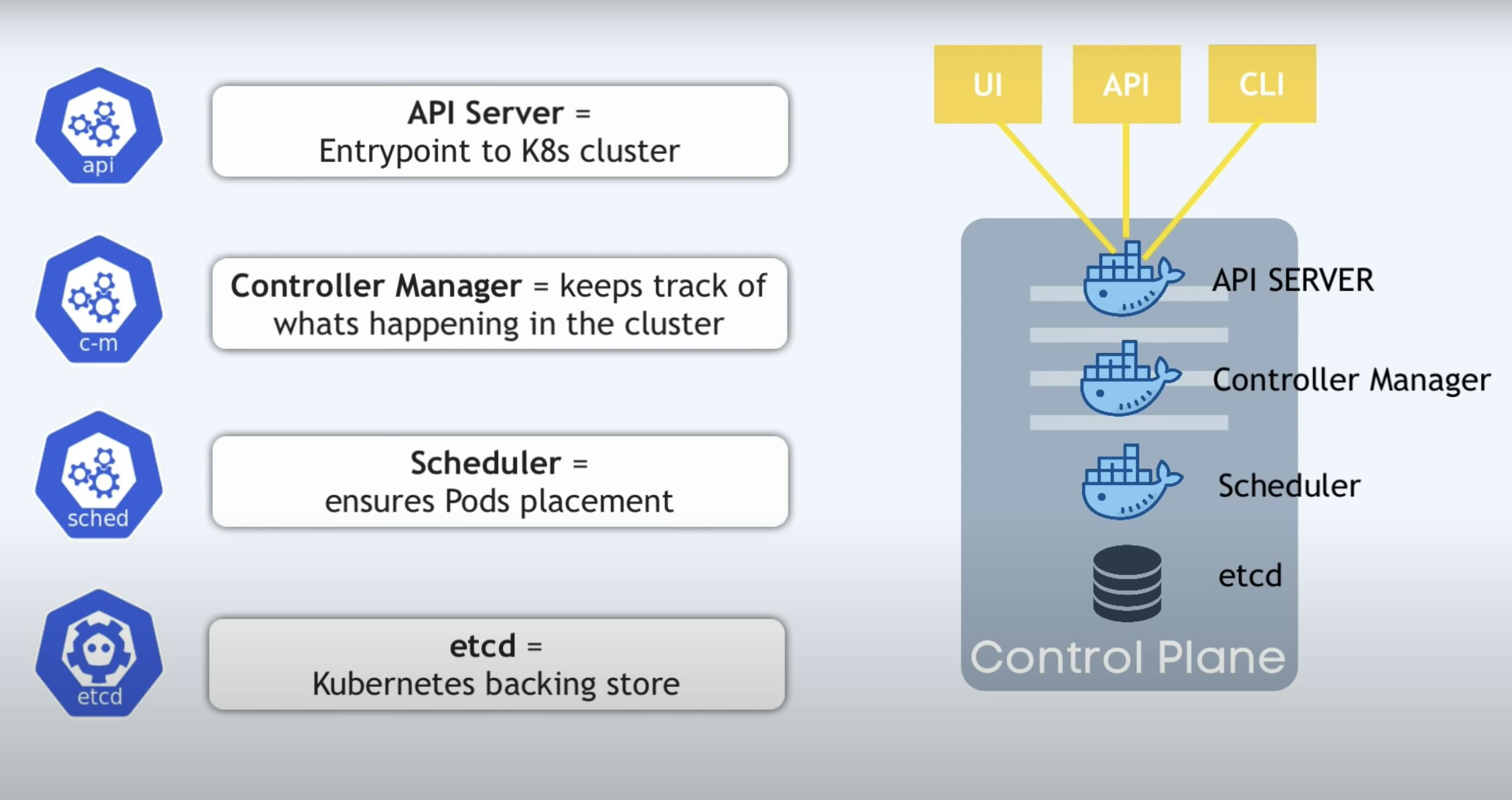

What is the Kubernetes Control Plane?

The control plane is the nerve center that houses Kubernetes cluster architecture components that control the cluster. It also maintains a data record of the configuration and state of all of the cluster’s Kubernetes objects.

The Kubernetes control plane is in constant contact with the compute machines to ensure that the cluster runs as configured. Controllers respond to cluster changes to manage object states and drive the actual, observed state or current status of system objects to match the desired state or specification.
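The idea of driving the observed state toward the desired state can be sketched in a few lines. This is a simplified, in-memory illustration rather than how Kubernetes controllers are actually implemented; the class `ReplicaController` and the pod names are hypothetical.

```python
import itertools


class ReplicaController:
    """Toy controller that keeps a list of pod names at a desired length."""

    def __init__(self, desired: int):
        self.desired = desired           # desired state (the specification)
        self.pods: list[str] = []        # observed state (what is running now)
        self._ids = itertools.count()    # source of unique pod name suffixes

    def reconcile(self) -> None:
        # Too few pods: start new ones until the count matches.
        while len(self.pods) < self.desired:
            self.pods.append(f"pod-{next(self._ids)}")
        # Too many pods: stop the most recently added ones.
        while len(self.pods) > self.desired:
            self.pods.pop()


controller = ReplicaController(desired=3)
controller.reconcile()
print(controller.pods)           # ['pod-0', 'pod-1', 'pod-2']

controller.pods.remove("pod-1")  # simulate a pod failure
controller.reconcile()
print(controller.pods)           # ['pod-0', 'pod-2', 'pod-3']
```

The controller never receives a "restart pod-1" instruction. It only compares the two states and corrects the difference, which is why a failed pod is replaced without manual intervention.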

Components of K8s Control Plane

The components of the control plane include:

Kubernetes API Server: The front end of the K8s control plane, the API Server supports updates, scaling, and other kinds of lifecycle orchestration by providing APIs for various types of applications.

It provides a REST API that enables users to interact with the cluster and manage its resources. It acts as the primary interface for users and external systems to interact with the cluster and perform actions such as creating or updating deployments, scaling applications, or monitoring the state of the cluster.

Kubernetes Scheduler: The K8s scheduler tracks resource usage for each compute node, determines whether new containers need to be deployed and, if so, decides where they should be placed.

The scheduler considers the overall health of the cluster alongside the pod’s resource demands, such as CPU or memory. It then selects an appropriate compute node and schedules the pod, taking resource limits or guarantees, data locality, quality-of-service requirements, affinity and anti-affinity specifications, and other factors into account.

Kubernetes Controller Manager: The controller manager (kube-controller-manager), sometimes simply called the "controller", is a daemon that runs the cluster's core control loops through several controller functions. It should not be confused with the cloud controller manager, a separate component that handles cloud-provider-specific control logic.

The controller watches the objects it manages in the cluster as it runs the Kubernetes core control loops. It observes them for their desired state and current state via the API server. If the current and desired states of the managed objects don’t match, the controller takes corrective steps to drive object status toward the desired state. The Kubernetes controller also performs core lifecycle functions.

etcd: Distributed and fault-tolerant, etcd is an open-source key-value store that holds configuration data and information about the state of the cluster. etcd may run externally, although it is often part of the Kubernetes control plane.

etcd is also used to store configuration details such as ConfigMaps, subnets, and Secrets, along with cluster state data.
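Returning to the scheduler described earlier in this list, its placement decision can be pictured as a filter step followed by a scoring step. The sketch below is a deliberately simplified assumption: the `Node` fields, the `schedule` function, and the "most free resources wins" scoring rule are illustrative and not the real scheduler's algorithm.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millis: int   # unallocated CPU in millicores
    free_memory_mib: int   # unallocated memory in MiB
    healthy: bool = True


def schedule(nodes: list[Node], cpu_millis: int, memory_mib: int) -> Optional[str]:
    """Pick a node for a pod that requests the given CPU and memory."""
    # Filter: drop nodes that are unhealthy or cannot fit the request.
    feasible = [
        n for n in nodes
        if n.healthy
        and n.free_cpu_millis >= cpu_millis
        and n.free_memory_mib >= memory_mib
    ]
    if not feasible:
        return None  # the pod would stay pending
    # Score: prefer the node with the most resources left after placement.
    best = max(
        feasible,
        key=lambda n: (n.free_cpu_millis - cpu_millis, n.free_memory_mib - memory_mib),
    )
    return best.name


nodes = [
    Node("node-a", free_cpu_millis=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millis=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millis=4000, free_memory_mib=8192, healthy=False),
]
print(schedule(nodes, cpu_millis=1000, memory_mib=2048))  # node-b
```

Here `node-a` is filtered out for lacking CPU and `node-c` for being unhealthy, so `node-b` is chosen. Factors such as affinity rules or data locality would add further filters and scores in the same spirit.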

What are Worker Nodes?

Managed by the control plane, cluster nodes are machines that run containers. Each node runs an agent for communicating with the control plane: the kubelet, the primary node agent. Each node also runs a container runtime, such as containerd or CRI-O (Docker Engine can still be used through the cri-dockerd adapter). The node also runs additional components for monitoring, logging, service discovery, and optional extras.

Each node could be either a physical or virtual machine and is its own Linux environment. Every node also runs pods, which are composed of containers.

A Kubernetes cluster must have at least one compute node, although it may have many, depending on the need for capacity.

Components of the Worker Nodes

The components of the worker nodes include:

Kubelet: The kubelet is the agent that runs on each node in the cluster. When the control plane requires a specific action to happen on a node, the kubelet receives the pod specifications through the API server and executes the action. It then ensures the associated containers are healthy and running.

Service-Proxy/Kube-Proxy: Each compute node contains a network proxy called a Kube-proxy that facilitates Kubernetes networking services. It either forwards traffic itself or relies on the packet filtering layer of the operating system to handle network communications both outside and inside the cluster.

The Kube-proxy runs on each node to ensure that services are available to external parties and deal with individual host subnetting. It serves as a network proxy and service load balancer on its node, managing the network routing for UDP and TCP packets. In fact, the Kube-proxy routes traffic for all service endpoints.

Container Runtime: The container runtime, such as containerd, CRI-O, or Docker Engine (through an adapter), is responsible for running the containers that make up the pods.

When a pod is scheduled on a worker node, the Kubernetes kubelet communicates with the container runtime to start the containers that make up the pod. The container runtime is responsible for creating the containers and setting up their networking and storage resources, as well as monitoring their health and resource usage.
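The load-balancing role of kube-proxy described above can be illustrated with a small round-robin picker. This is only a conceptual sketch: the class `ServiceProxy` and the endpoint addresses are made up, round-robin is just one possible policy, and in practice kube-proxy usually programs the operating system's packet-filtering rules rather than choosing backends in application code.

```python
import itertools


class ServiceProxy:
    """Toy load balancer that rotates through a service's pod endpoints."""

    def __init__(self, endpoints: list[str]):
        if not endpoints:
            raise ValueError("a service needs at least one endpoint")
        self._cycle = itertools.cycle(endpoints)

    def pick(self) -> str:
        """Return the endpoint that should receive the next connection."""
        return next(self._cycle)


# Hypothetical pod addresses backing a single service.
proxy = ServiceProxy(["10.0.1.5:8080", "10.0.2.7:8080", "10.0.3.9:8080"])
picks = [proxy.pick() for _ in range(4)]
print(picks)
# ['10.0.1.5:8080', '10.0.2.7:8080', '10.0.3.9:8080', '10.0.1.5:8080']
```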

Other Components

kubectl is a command-line tool that you use to communicate with the Kubernetes API server. The kubectl binary is available in many operating system package managers. Using a package manager for your installation is often easier than a manual download and install process.

Container Network Interface (CNI) is a specification and library for configuring network interfaces in Linux containers. The main purpose of CNI is to allow different networking plugins to be used with container runtimes. This allows Kubernetes to be flexible and work with different networking solutions, such as Calico, Flannel, and Weave Net. CNI plugins are responsible for configuring network interfaces in pods, such as setting IP addresses, configuring routing, and managing network security policies.

Node Controller is responsible for managing Worker Nodes. It will monitor the new Nodes connecting to the cluster, validate the Node's health status based on metrics reported by the Node's Kubelet component, and update the Node's status field. If a Kubelet stops posting health checks to the API Server, the Node Controller will be responsible for triggering Pod eviction from the missing Node before removing the Node from the cluster.

Replication Controller ensures that a specified number of pod replicas are running at any one time. In other words, a Replication Controller makes sure that a pod or a homogeneous set of pods is always up and available.
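The Node Controller's health check described above boils down to comparing the time of each node's last report against a grace period. The sketch below assumes a hypothetical 40-second grace period and made-up status labels (`healthy`, `unreachable`); real clusters use their own configured timeouts and status conditions.

```python
def node_statuses(
    last_heartbeat: dict[str, float],
    now: float,
    grace_seconds: float = 40.0,  # assumed value, for illustration only
) -> dict[str, str]:
    """Classify each node by how long ago its kubelet last reported in."""
    return {
        name: "healthy" if now - seen <= grace_seconds else "unreachable"
        for name, seen in last_heartbeat.items()
    }


# Timestamps in seconds; node-b last reported 55 seconds before "now".
heartbeats = {"node-a": 100.0, "node-b": 55.0}
print(node_statuses(heartbeats, now=110.0))
# {'node-a': 'healthy', 'node-b': 'unreachable'}
```

A node flagged this way would be the one whose pods become candidates for eviction and rescheduling elsewhere.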

What is the role of the API server?

The API server is a core component of the control plane in Kubernetes.

It acts as the primary interface for communication between various Kubernetes components and external entities. The API server exposes the Kubernetes API, which allows users, administrators, and other components to interact with the cluster.

Here are some of the key responsibilities of the API server:

The API server is responsible for managing the overall state and configuration of the Kubernetes cluster.

The API server handles user authentication and authorization.

The API server acts as an endpoint for clients and other Kubernetes components to interact with the cluster. This allows users to create, update, and delete Kubernetes resources, as well as retrieve information about the cluster's state.

The API server performs validation and admission control for incoming API requests.

The API server interacts with the etcd key-value store, which serves as the cluster's data store.

The API server manages the lifecycle of resources and lets the controllers running within the control plane watch for changes, which is what drives their control loops.
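The responsibilities listed above can be read as stages that a single request passes through. The following sketch walks a "create" request through authentication, authorization, admission, validation, and storage. Everything in it is an assumption for illustration: the function `handle_create`, the token and permission tables, the key layout, and the plain dictionary standing in for etcd.

```python
class ApiError(Exception):
    """Raised when a request is rejected at any stage."""


def handle_create(request: dict, tokens: dict, permissions: set, store: dict) -> str:
    # 1. Authentication: who is making the request?
    user = tokens.get(request.get("token"))
    if user is None:
        raise ApiError("unauthenticated")

    # 2. Authorization: is this user allowed to create this kind of object?
    kind = request["object"]["kind"]
    if (user, "create", kind) not in permissions:
        raise ApiError("forbidden")

    # 3. Admission: fill in defaults (here, a default namespace).
    obj = dict(request["object"])
    metadata = dict(obj.get("metadata", {}))
    metadata.setdefault("namespace", "default")
    obj["metadata"] = metadata

    # 4. Validation: reject objects that are missing required fields.
    if not metadata.get("name"):
        raise ApiError("invalid: metadata.name is required")

    # 5. Persistence: write to the data store (a dict stands in for etcd).
    key = f"/{kind.lower()}s/{metadata['namespace']}/{metadata['name']}"
    store[key] = obj
    return key


tokens = {"secret-token": "alice"}           # hypothetical credentials
permissions = {("alice", "create", "Pod")}   # hypothetical access rules
store: dict = {}

request = {"token": "secret-token", "object": {"kind": "Pod", "metadata": {"name": "web"}}}
print(handle_create(request, tokens, permissions, store))  # /pods/default/web
```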

Difference between Kubectl and Kubelet

The differences between kubectl and kubelet are as follows:

kubectl:

Purpose: kubectl is a tool you use to talk to and manage your Kubernetes cluster.

Interface: It's like your remote control for Kubernetes. You can use it to give commands to the cluster, like starting or stopping applications.

User-Friendly: kubectl is designed for people to use, so it has a user-friendly interface and commands.

Location: It runs on your computer and connects to the Kubernetes cluster to control it.

Examples: You use kubectl to create and manage things like applications, services, and configurations in your Kubernetes cluster.

kubelet:

Purpose: kubelet is like the worker on each computer in your Kubernetes cluster.

Node Manager: It makes sure the containers (the parts of your applications) on that computer are running as they should be.

Container Manager: It specifically takes care of starting, stopping, and keeping an eye on the containers.

Node Health: kubelet checks if the computer (node) is healthy and tells the main control center if there's a problem.

Not for Users: You don't use kubelet directly. It quietly does its job in the background on each computer in the cluster.

In short, kubectl is like your remote control for managing the whole cluster, while kubelet is the worker on each computer, making sure the applications (containers) on that computer run smoothly.
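One way to picture the "remote control" role is that kubectl turns each command into a REST request against the API server. The sketch below builds the URL path for listing a namespaced resource. The helper name `resource_path` is hypothetical, while the two path layouts (`/api/v1/...` for core resources and `/apis/<group>/<version>/...` for grouped resources) follow the Kubernetes API conventions.

```python
from typing import Optional


def resource_path(
    resource: str,
    namespace: str,
    group: Optional[str] = None,
    version: str = "v1",
) -> str:
    """Build the API server path for a namespaced resource collection."""
    # Core resources live under /api; grouped resources live under /apis/<group>.
    prefix = f"/apis/{group}/{version}" if group else f"/api/{version}"
    return f"{prefix}/namespaces/{namespace}/{resource}"


# Roughly what "kubectl get pods -n default" asks the API server for:
print(resource_path("pods", "default"))
# /api/v1/namespaces/default/pods

# Roughly what "kubectl get deployments -n default" asks for:
print(resource_path("deployments", "default", group="apps"))
# /apis/apps/v1/namespaces/default/deployments
```

The kubelet, by contrast, is on the receiving side: it learns from the API server which pods belong on its node and makes them run.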

Conclusion

In conclusion, Kubernetes (K8s) is a powerful open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications.

K8s architecture is built around a master node and multiple worker nodes, forming a distributed system that ensures high availability and fault tolerance.

The master node oversees cluster orchestration, scheduling, and communication, while worker nodes execute tasks and host containers.

The master-node and worker-node structure, combined with essential components like the API server, etcd, the controllers, the scheduler, kube-proxy, and the kubelet, forms the backbone of K8s. K8s has become a standard in the container orchestration space, providing a versatile and efficient platform for deploying and managing applications in modern, cloud-native environments.

Monolithic architecture offers simplicity and ease of development but may become unwieldy as the application grows. Microservices provide scalability, flexibility, and fault isolation but come with increased complexity in terms of deployment and management.